Only distributed fact-checking can keep up with democratized distribution

Has social media democratized reporting as promised? We’ve democratized writing and publishing, but this year has made the point that there’s more to journalism than hitting send. The rest of the stuff that happens in the newsroom has stayed in the newsroom: cultivating sources, editing for quality, and most importantly, fact-checking.

Lacking checks, social media is amplifying or at least fertilizing false beliefs, and then people cross state lines to shoot up pizza joints. If the newsroom’s adversarial functions can’t make the jump to social media, life will continue to be this weird.

We’re starting to address fact-checking, and will do more. Failing to curb antisocial behavior kills companies, as well as communities.

Facebook has the technology to filter out the content its users are most likely to call ‘bad for the world’ (scored as ‘P(bad for the world)’), but only at the cost of lower engagement. Given these incentives, nobody benefits if we leave this to platforms to solve. Users should bring their own moderation to the web.
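The trade-off above can be sketched as a threshold on a per-post score. This is a toy, not Facebook’s system: the `p_bad` score and the engagement numbers are invented, and the feed is deliberately built so that the most engaging post is also the worst-scoring one. Under that assumption, any threshold strict enough to remove the bad content also removes most of the engagement.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Post:
    post_id: str
    p_bad: float     # hypothetical score: predicted chance users would call it "bad for the world"
    engagement: int  # hypothetical likes + shares + comments


def filter_feed(posts: List[Post], threshold: float) -> Tuple[List[Post], float]:
    """Drop posts scoring above `threshold`; return the survivors and the share of engagement they keep."""
    kept = [p for p in posts if p.p_bad <= threshold]
    total = sum(p.engagement for p in posts)
    retained = sum(p.engagement for p in kept) / total if total else 1.0
    return kept, retained


# Made-up feed in which the most engaging post also scores worst.
posts = [
    Post("a", p_bad=0.05, engagement=120),
    Post("b", p_bad=0.40, engagement=300),
    Post("c", p_bad=0.85, engagement=900),
    Post("d", p_bad=0.10, engagement=80),
]

for threshold in (1.0, 0.5, 0.2):
    kept, retained = filter_feed(posts, threshold)
    print(threshold, [p.post_id for p in kept], f"{retained:.0%} of engagement kept")
```

At a threshold of 0.5 this toy feed keeps about 36% of its engagement; at 0.2, about 14%. A platform paid in engagement has every reason to leave the dial at 1.0.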

- Private groups will decamp if they don’t like the fact bubbles

- Conspiracies are topics too

- Cranky boomers R us

- BYO vs centralized moderation

- Information also has professional customers

- Conclusions

- Appendix: the unabomber

- Appendix: thedonald

Private groups will decamp if they don’t like the fact bubbles

Filter bubbles don’t have to put up with fact bubbles. By filter bubbles, I mean clique groups that see mostly content that supports their priors. By fact bubbles, I mean those blue exclamation marks that say ‘official sources have called the election differently’.

Private groups have the power to resist centralized moderation if they don’t like it. Private groups are sticky, which is why facebook bet its future on them, but this stickiness makes them hard to control. Yes, their internal cohesion leads to engagement on FB, but it also allows them to move off the platform if they’re unhappy. They own their slice of the network; their moat has a drawbridge.

A heavy hand will drive private groups to dark corners of the web: not un-moderated exactly, because trust me, nobody wants that, but ‘differently moderated’. Reality is a participation sport, and groups that don’t want to participate won’t.

That said, deplatforming isn’t zero-cost for groups: they recruit in public spaces, and are helped by ‘suggested groups’ features (which FB partially disabled for the election). Standalone forums can’t grow like that. The fickle graces of hosts and DDoS-protection companies also make it hard to go solo.

But it’s not like IRL networks will stop feeding online groups. The counterargument to ‘social media gives bad politicians a voice’ is that once someone has been elected, they’ll probably have a voice anyway. FB’s design gives eyeballs to demagogues but so did Leni Riefenstahl.

As I drafted this in early november, twitter was fact-bubbling multiple tweets in a row from the president, the ‘uncensored’ social media app parler trended to #1 in apple’s store, and local hero Bill Shatner took up the cause of moderation to oppose them. The ‘moderation drives exodus’ hypothesis is plausible.

But parler can’t really want to be unmoderated. Their debut came with a quick correction that despite the sales pitch, your username can’t be cumdumpster. (Twitter has allowed this since at least 2013).

My point: nobody wants to live in a trash can. Every community has some kind of semi-official management. I think even 8kun revoked someone’s access to the Q account after they had a falling out (admittedly, not over content I think, and also I don’t really understand this world). But the forms of moderation we invent have to be trusted or at least tolerated, or people will ditch.

Fact-checking, if it’s trustworthy and useful, will be embraced IMO. But it can’t be imposed.

Conspiracies are topics too

A swedish friend, trying to explain why their far-right party gained power in the Riksdag, said something like ‘It’s not that we’re all nazis, but nobody else would even talk about immigration, and it’s a real problem’.

I like the ‘marketplace of ideas’ as a concept.[1] Markets are an interesting model for public discourse. Like goods on a market, only a limited number of explanations or ideologies are available (assuming most people can’t coin their own, which, for various reasons, I believe they can’t). Also, like a real market, it’s two-sided: in addition to the ideas on offer, there are buyers, people looking for an action, belief, or political party that suits them.

The marketplace model explains why political parties get to lie out loud, be revealed for hypocrisy, and admit their strategy is disingenuous. They’re still the only rallying point for ‘critical idea X’ that their buyers need. It may not matter to the fighting faithful if the center is hollow. They’re willing to ignore the cognitive dissonance.

I wonder if there’s a similar effect in the antivax movement: reported autism rates seem to really be on the rise (0.7 to 1.5% in this century), and if your family is affected by this, and there’s no other explanation, you ‘buy’ the idea that comes with a clear cause and a solution.

Cranky boomers R us

People are seeking answers that are plausible to them, but may not be able or willing to dig deeply, and may not be ready to hear the truth if it means abandoning their hope, identity, or existing opinions. I suspect if someone is at the bottom of a rabbit hole and sees a fact bubble saying ‘this is stupid’, that’s the same as saying ‘you’re stupid’, and they won’t listen. The challenge here is matching them with a well-meaning authority whom they’ll trust and find useful.

There was a measles outbreak in Minnesota enabled by a high vaccine refusal rate in a single immigrant community. Parents noticed a lot of their kids in special autism education,[2] then fell prey to antivax lecturers or something. But the parents had access to doctors. And presumably they had access to other experts at the autism schools. They ignored the fact bubble. They chose who to trust.

On the flip side, community-owned moderation sometimes works rather well. I’m thinking about subreddits, which tend to have very specific rules about what to post; their users accept that these communities are actively moderated for the benefit of the community. (Given that the community is a result of moderation, this is a little circular and feedback loop-y, but that may not be a bad thing.)

I asked a friend who mods a small subreddit what it’s like: “In six years, no one has ever said anything mean on my subreddit. I’ve never had to ban anyone and submitters and subscribers are respectful.”

Cranky boomers got us into this mess by believing everything they read on facebook. Maybe Cranky-Boomers-R-Us™️ is the right branding for a community fact check to filter out some fast-spreading egregious claims.

We train people to consider the source, but mainstream mastheads like NYT feel ideologically hostile to readers who aren’t centrists over 55. Their news coverage can be accurate without being neutral – factual claims in articles are fact-checked, but that’s mixed with interpretive conclusions. The op-eds are far stranger.

I don’t blame people for switching to ideologically comfortable news. I just wish they had a fact-check that was ideologically comfortable, but also competent and reputation-bound, to go along with it.

Repentant neocon Francis Fukuyama writes about social trust now and is worried about a post-fact world where “all authoritative information sources are challenged by contrary facts of dubious quality and provenance”. Readers have reasons for straying from reality. Let’s give people an ideologically comfortable way to come back.

Gentler public health messaging that accepts people’s lifestyles can have higher efficacy; I suspect the same is true of fact-checks. Our goal isn’t to change minds; it’s to improve outcomes.

BYO vs centralized moderation

We need a market ecosystem of moderation vendors who provide different kinds of value to different kinds of users. A moderation marketplace is good for platforms because they don’t want to be the arbiter of truth, they just want users to have a good experience. (Platforms will still have some policing to do on their own).

It’s good for users because they’re not forced into anything; they can pick which web they want to dwell on. This may sound like a filter bubble, where ‘everyone has their own truth’, but moderation vendors will still need to maintain their reputation for being right – whatever their ideological alignment. Moderation vendors, unlike cable news channels, don’t produce content; they just react to it and grade it. BYO moderation isn’t BYO newspaper, it’s BYO snopes / politifact.
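A minimal sketch of what a vendor could look like, assuming a made-up `Vendor` record with an ideological label and a public track record (hits and misses). Nothing here is an existing product or API, and the vendor names and numbers are invented. The point is the shape: vendors only grade claims, never publish them, and the user picks one by alignment first and accuracy second.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Vendor:
    """A hypothetical moderation vendor: it grades claims but publishes no content of its own."""
    name: str
    alignment: str                                # ideological leaning, e.g. "left" / "right"
    rulings: dict = field(default_factory=dict)   # claim -> label, e.g. "misleading"
    hits: int = 0                                 # past rulings that held up
    misses: int = 0                               # past rulings later shown to be wrong

    def grade(self, claim: str) -> Optional[str]:
        return self.rulings.get(claim)  # None means "haven't checked this one"

    def accuracy(self) -> float:
        # Laplace-smoothed track record, so a vendor with 2 hits and 0 misses
        # doesn't outrank one with 970 hits and 30 misses.
        return (self.hits + 1) / (self.hits + self.misses + 2)


def pick_vendor(vendors: List[Vendor], alignment: str) -> Optional[Vendor]:
    """Filter on the ideological axis first, then choose on the accuracy axis."""
    candidates = [v for v in vendors if v.alignment == alignment]
    return max(candidates, key=Vendor.accuracy, default=None)


vendors = [
    Vendor("left-checks", "left", {"claim-1": "misleading"}, hits=950, misses=50),
    Vendor("right-checks", "right", {"claim-1": "misleading"}, hits=880, misses=120),
    Vendor("right-rigorous", "right", {"claim-1": "misleading"}, hits=970, misses=30),
    Vendor("right-new", "right", {}, hits=2, misses=0),
]

mine = pick_vendor(vendors, "right")
print(mine.name, mine.grade("claim-1"))  # right-rigorous misleading
```

The smoothing in `accuracy` is one arbitrary choice; any score that discounts a thin track record would do. Note that vendors on different sides can still agree on a verdict, which is the whole idea of keeping alignment and accuracy on separate axes.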

Centralized moderation has problems:

- The costs are asymmetric, meaning it’s too expensive to do widely, well, and fast (see lego point below). Jimmy Wales of wikipedia describes how these economics lead to a sweatshop model.

- It’s susceptible to abuse by platforms and institutions and therefore will be less trusted by users, especially the more conspiracy-minded ones whom we want to deradicalize. Note I’m not saying ‘prone to abuse’ or ‘untrustworthy’. Just less trust-ed, and less able to balance minority viewpoints in a way that feels authentic to minorities.

- Private groups will turn off mod systems that aren’t effective, ergonomic, and trustworthy, or else leave the platform.

- For messy issues, cookie-cutter moderation can support violence by hiding its proof. Or like where’s the parler for breastfeeding?

Every community needs moderation. Some get away without it because they moderate membership rather than content – small workplaces, for example, have relatively informal content moderation processes, but can fire you.

This isn’t just a facebook / twitter issue anymore: it’s a problem for anyone with a supply of eyeballs and user-generated content (UGC) or DM features, like pinterest and linkedin. The older and funnier version of this is the lego MMO’s penis problem: they scrapped their MMO specifically and entirely because of the cost of moderation. A section 230 expert, talking about what happens in case of a 230 repeal, observes that sites which don’t do any moderation in 2020 are unusable.

The need to moderate isn’t a new problem. Philosopher of science Karl Popper described the paradox of tolerance (often called Popper’s paradox) while thinking about the risks of tolerating intolerant views, and the parsing of arguments that happens when bad people ask for equal time. Our problems wouldn’t surprise him.

BYO is better because:

- The costs are borne by users. The model can be crowd-funded research: for example, if 10 people each offer $5 to check a claim, the resulting $50 may make it worthwhile for a researcher to take on.

- The dispute & appeal processes can be more transparent and community-owned. I know people working on safety systems for dating apps, and the user feedback driving this is that the report process is opaque, dissatisfying, and ineffective. It’s the same on social.

- Your ability to shop for a vendor leads to a customer service mentality by vendors. It also makes users view fact-checking as a utility, i.e. something useful that they call on to resolve doubt.

- Ideological alignment and factual reputation will become separate axes. Even if I’m only looking for party-approved fact-checkers, I can still pick the best one.

- The plugin architecture can support moderation workflows that don’t exist today – for example, voluntary and amplified retraction.
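The crowd-funded research item above can be sketched as a pledge pool with a funding threshold. This assumes a hypothetical `Bounty` type and a researcher who will take the job for exactly $50; real pricing, payment, and refunds are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Bounty:
    """A hypothetical pull-based fact-check request, funded by the people who want the answer."""
    claim: str
    researcher_cost: float                       # assumed price for a researcher to take the job
    pledges: dict = field(default_factory=dict)  # user -> total pledged amount

    def pledge(self, user: str, amount: float) -> None:
        if amount <= 0:
            raise ValueError("pledge must be positive")
        self.pledges[user] = self.pledges.get(user, 0.0) + amount

    @property
    def total(self) -> float:
        return sum(self.pledges.values())

    @property
    def funded(self) -> bool:
        # In an all-or-nothing ("assurance contract") design, pledges would only be
        # charged once this is True; this sketch just tracks them.
        return self.total >= self.researcher_cost


bounty = Bounty("claim-1", researcher_cost=50.0)
for i in range(10):
    bounty.pledge(f"user{i}", 5.0)
print(bounty.total, bounty.funded)  # 50.0 True
```

The all-or-nothing variant matters for trust: nobody pays for a check that never happens, so pledging is cheap and the researcher only starts work once the pot is real.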

Decentralized moderation is starting to exist in decentralized platforms, though it’s not exactly BYO. This knight foundation article on mastodon talks about self-hosted communities experimenting with alternative moderation rules. (The examples, gab + lolicon, aren’t inspiring.) A proposed distributed reputation system for matrix uses external reputation feeds that you can subscribe to. Whatsapp is testing out meedan’s ‘check’ tool, which mixes human and automated review but doesn’t seem to be community-driven.
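One way subscribable reputation feeds could be combined, assuming each feed is a hypothetical mapping from a Matrix-style user ID to a score between -1 and 1, and the user gives each subscribed feed a weight. This is not the format of the matrix proposal mentioned above, just a sketch of the subscribe-and-merge idea.

```python
from typing import Dict, List, Optional, Tuple


def combined_reputation(
    entity: str, subscriptions: List[Tuple[float, Dict[str, float]]]
) -> Optional[float]:
    """Weighted average over the subscribed feeds that have an opinion on `entity`.

    `subscriptions` is a list of (weight, feed) pairs chosen by the user.
    Returns None when no subscribed feed mentions the entity.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for weight, feed in subscriptions:
        if entity in feed:
            weighted_sum += weight * feed[entity]
            total_weight += weight
    return weighted_sum / total_weight if total_weight else None


# Hypothetical feeds: a broad anti-spam list and a small list curated by people I trust.
spam_feed = {"@bot:example.org": -1.0, "@alice:example.org": 0.2}
friends_feed = {"@alice:example.org": 0.9}
my_subscriptions = [(1.0, spam_feed), (3.0, friends_feed)]

print(combined_reputation("@alice:example.org", my_subscriptions))  # about 0.725
print(combined_reputation("@bot:example.org", my_subscriptions))    # -1.0
print(combined_reputation("@carol:example.org", my_subscriptions))  # None
```

Returning None instead of 0 keeps ‘nobody I trust has an opinion’ distinct from ‘people I trust are neutral’, which a client might display differently.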

Ghost Knowledge is another example of a pull-based, paid, public question-answering system.

Information also has professional customers

Complex and open-minded thought is most likely to be activated when decision makers learn prior to forming any opinions that they will be accountable to an audience (a) whose views are unknown, (b) who is interested in accuracy, (c) who is reasonably well-informed, and (d) who has a legitimate reason for inquiring into the reasons behind participants’ judgments/choices.

– Philip Tetlock, summarized in Annie Duke’s Thinking in Bets

Misinformation online is often presented as a problem of preventing bad decisions based on bad information – don’t cross state lines to shoot up a pizza joint, don’t skip your vaccine. But there are people who are trying to make good decisions who need good information.

There are topics like flat earth theory, where there’s no real controversy over what’s at issue. But there’s also dark matter, where observational evidence is hard to get and nobody is quite sure why galaxies rotate the way they do. As a non-astronomer, I’d benefit from a better-moderated internet that helps me stay up to date on, say, MOND, an alternate explanation of galaxy-scale physics.

I read about the 1920 Shapley-Curtis Debate over ‘spiral nebulae’: were they tiny suburbs of the milky way, or ‘island universes’ (a term coined by Kant), equal in scale and very far away? Shapley came armed with observations, later proven false, that the messier object M101 was rotating at a speed that would violate the speed of light if it were very far away. This wasn’t settled until Hubble studied cepheid variables a few years later.
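Shapley’s argument is simple geometry. A minimal version, assuming the rotation shows up as an angular proper motion μ of features in the spiral arms (in radians per unit time) and ignoring projection effects: at distance d, those features move across our line of sight at speed

```latex
v_t = \mu \, d
\qquad\Longrightarrow\qquad
v_t < c \iff d < \frac{c}{\mu}
```

In astronomers’ units this is v_t ≈ 4.74 μ d, with v_t in km/s, μ in arcseconds per year, and d in parsecs. So a measured rotation rate caps how far away the nebula can be, and the ‘island universe’ distances only became admissible once the rotation measurements were shown to be wrong.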

Flat earthers have experiments on their website you can try yourself. I heard an interview with someone from their group where he said “the flat earth society places an emphasis on personally experienced evidence”. As if some polymath hadn’t proven this to our satisfaction over two thousand years ago.

Your time and expertise are limited. You can’t get a master’s degree in everything interesting or relevant to your life. Nobody has time to seek independent truth; even experts in ancient democracy have to trust their sources.

I really believe everyone has some interest in and value for correct information. But organizations that make large bets elevate truth-seeking as a core function. (For example, and not to boost ray dalio who is doing a book tour and seems like a windbag, but bridgewater’s culture of radical transparency). Orgs that suppress internal truth-finding get turned into salad by the competition. Political scientist Caitlin Talmadge writes about how this phenomenon can hobble armies.

If we build tools to help filthy casuals like me have useful + realistic beliefs about the world, the same tools will help information professionals have up-to-date beliefs. I have a book waiting on my kindle about the half-life of facts, which claims that, compared to 40 years ago, valid beliefs become invalid more quickly. Tooling to help us realign when that happens would make us stronger decision-makers, and may be similar in broad outline to BYO moderation tools.
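One way to make ‘half-life of facts’ concrete, assuming (purely for illustration) that facts in a field go stale independently at a constant rate: with a half-life T, the fraction of today’s valid beliefs still valid after t years is

```latex
f(t) = 2^{-t/T} = e^{-\lambda t},
\qquad
\lambda = \frac{\ln 2}{T}
```

With a hypothetical half-life of T = 10 years, f(20) = 2^{-2} = 0.25: a quarter of what you learned 20 years ago still holds. In these terms, the book’s claim is that T has been shrinking, which makes it more valuable to have tools that flag stale beliefs instead of waiting for us to stumble on the correction.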

In the same way that I have a ‘like’ button, I might like a ‘believe’ button that lets me mark a stat or claim I’m taking to heart, not publicly, but so that I can subscribe to retractions, updates, explanations.

To plan ahead, you have to have beliefs. It’s better if those beliefs are correct. ‘A thing you believed is now false’ should be the highest-ranked update on my timeline.
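The ‘believe’ button and the ranking rule above could be sketched like this, assuming hypothetical `Beliefs` and `Update` types and an arbitrary priority order for kinds of update. Beliefs are stored privately per user; only updates that touch a believed claim get boosted, with retractions at the very top.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional, Set


class UpdateKind(IntEnum):
    # Higher value ranks higher; this ordering is an assumption for the sketch.
    ORDINARY = 0
    EXPLANATION = 1
    UPDATE = 2
    RETRACTION = 3  # "a thing you believed is now false"


@dataclass
class Update:
    claim_id: Optional[str]  # which claim this update is about, if any
    kind: UpdateKind
    text: str
    timestamp: int


@dataclass
class Beliefs:
    """Private set of claims the user marked with a hypothetical 'believe' button."""
    claim_ids: Set[str] = field(default_factory=set)

    def believe(self, claim_id: str) -> None:
        self.claim_ids.add(claim_id)

    def touches(self, update: Update) -> bool:
        return update.claim_id is not None and update.claim_id in self.claim_ids


def rank_timeline(updates: List[Update], beliefs: Beliefs) -> List[Update]:
    """Updates about believed claims first (retractions on top), then everything else by recency."""
    def key(u: Update):
        mine = beliefs.touches(u)
        # Outside the user's beliefs, every update ranks as ORDINARY and sorts by time alone.
        return (mine, u.kind if mine else UpdateKind.ORDINARY, u.timestamp)
    return sorted(updates, key=key, reverse=True)


beliefs = Beliefs()
beliefs.believe("stat-42")
timeline = [
    Update(None, UpdateKind.ORDINARY, "friend posted a photo", 300),
    Update("stat-42", UpdateKind.UPDATE, "stat-42 revised with new data", 100),
    Update("stat-42", UpdateKind.RETRACTION, "stat-42 was retracted", 50),
    Update("stat-7", UpdateKind.RETRACTION, "stat-7 retracted (never marked)", 200),
]
for u in rank_timeline(timeline, beliefs):
    print(u.text)
```

Retractions of claims the user never marked get no special treatment here. That is a deliberate choice in the sketch: the boost is reserved for things the reader actually took to heart, which keeps the top of the timeline short enough to matter.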

Conclusions

Every year, if not every day, we have to wager our salvation upon some prophecy based upon imperfect knowledge

– Oliver Wendell Holmes

But this is not all which distinguishes doubt from belief. There is a practical difference. Our beliefs guide our desires and shape our actions. The Assassins, or followers of the Old Man of the Mountain, used to rush into death at his least command, because they believed that obedience to him would insure everlasting felicity. Had they doubted this, they would not have acted as they did. So it is with every belief, according to its degree. The feeling of believing is a more or less sure indication of there being established in our nature some habit which will determine our actions. Doubt never has such an effect.

– Peirce, The Fixation of Belief

Information quality is absolutely a life or death issue: in questions of public health, in questions of war, in motivating lynch mobs.

I saw a tweet from a favorite blogger that summarized my feelings about public information in 2020:

the info most people have seems to be 4-N months behind what laypeople with 3 hours+pubmed knew in January.

The thing i wouldn’t have guessed in 2003 is that informative indie bloggers would be rendered irrelevant by “social” algorithms that funnel people to clickbait.

When we say information is ‘viral’ online we’re talking about the pace and pattern of its spread, but I wonder if there’s another way that information is viral on social media: when it’s packed into a small capsule, and doesn’t contain its full argument, it can only reproduce by destroying its host.

Thinking about just the user-facing features of social media, it’s 99% spread + engagement features now, with a few moderation and correctness features in the form of ‘report’ buttons. ‘Social with moderation’ will be a different medium. It will change our relationship with the truth, hopefully for the better.

New media has always created post-truth realities and that’s not always a bad thing. Although the church was Gutenberg’s best customer, printing also enabled the reformation. (And one of Luther’s complaints in 1517 was the sale of indulgences, which was also enabled by print tech).

Later Galileo would be arrested for publishing a book. We say lots of bad things online, but we also say some good things that are controversial because they’re true, and create change that is scary to the status quo but ultimately good. It can be tempting to resist change and maintain the world in its current shape. Eppur si muove.

Appendix: the unabomber

This is a letter written by the unabomber, who’s now in jail, in response to an admirer I guess. The pen pal is skeptical that another terrorist, the oklahoma city bomber, could have destroyed the murrah building, and was writing to talk about a pet conspiracy theory.

The unabomber’s main points in response are “you should read” Farhad Manjoo’s book about post-fact societies, “if you have access to a law library” check out the opinion against mcveigh, and that the pen pal’s argument about the explosion is wrong in a way you can check in the media.

How do you know there was a “strong consensus” of experts? Did you go around and interview the experts yourself? Or are you taking someone else’s word for it? There’s just too much bullshit out there

You can also get judicial opinions off the internet though I don’t know exactly how

Federal judges are not above lying when they find it convenient but, even so, the judicial opinion is probably a lot more reliable than whatever sources you’ve used

I think Kaczynski fascinates people who study extremism professionally. The linked tweet is from an expert, I think, on the aum shinrikyo cult that released sarin gas on the tokyo subway. Another extremism researcher published a book this year and has been posting updates about how his new book is charting next to Kaczynski’s manifesto, which is #31 in amazon’s terrorism bestseller list. (It’s also #3.)

Appendix: thedonald

(Update 1/8/2021)

Welp, 2 months later, a bunch of angry people from message boards forced their way into the US capitol building and disrupted an important vote.

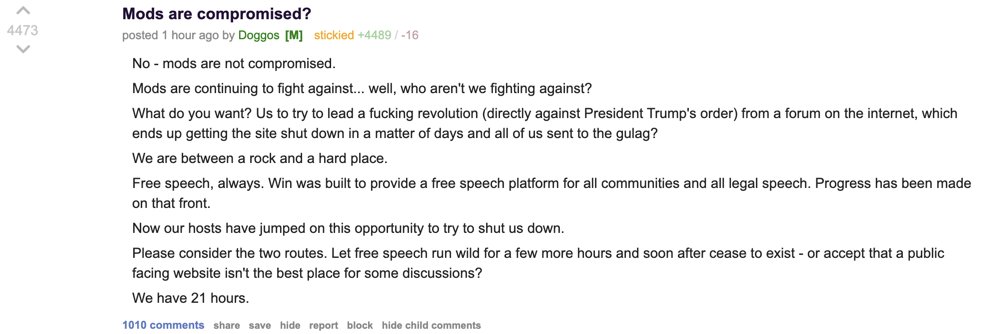

This is a mod thread at thedonald, a community that was kicked off reddit, in which the mods try to get their users to tone it down before their web host deplatforms them. I found it via an extremism researcher at mediamatters.

They understand very well the tradeoffs of public / private groups:

Let free speech run wild for a few more hours and soon after cease to exist – or accept that a public facing website isn’t the best place for some discussions

Note the mod’s username is ‘doggos’, which somehow is a cherry on top.

I have no idea what the consensus on anything will be in a week. In the 24 hours since I wrote the first draft of this, trump followers in the internet’s semiprivate corners have begun to talk like he betrayed them (per a buzzfeed reporter, an NBC reporter), we’re all learning about how these groups form beliefs and organize bus trips, and twitter banned him permanently after banning him temporarily to try and get him to apologize.

An upset fan (note pede is ‘centipede’, not ‘pedophile’, I’m told):

I have been a pede since 2015. … he has now abandoned us and disrespected everything we believe in.

The twitter ban announcement is interesting because the recent tweets look innocuous (albeit deranged). They had to read the memes and wade deep into the communities that parse this language to decide whether it was an exhortation to violence. They assumed it was a dog whistle, looked at how it was being interpreted, and acted accordingly.

This is a new philosophy of enforcement, I think.

The twitter post also says they detected planning on their platform for a second attack.

Experts on extremism and online stuff have found their voice. Some interesting one liners from this week:

- Matt Grossmann thinks both-sidesism is dangerous when one side is ignoring dangerous realities

- Matt Blaze notes trust in institutions is a non-technical, but still key, component of election integrity

Lots of extremism researchers are making fun of officials who say ‘this isn’t america’ with rebuttals like ‘where do you think it is’. Statements like ‘you mustn’t storm the capitol because we have a system of laws’ ring hollow now. The counterargument is ‘we can have a system of laws because people don’t storm the capitol’, but now they have.

Congress has woken up to this because congress is personally affected, in the same way leaders decide gay people should have rights because they finally met one. It would be great to have a competent governing system that can realize what’s happening in the world without personally experiencing it. Remember who else places a premium on ‘personally experienced evidence’? Flat-earthers.

trump followers are upset. They feel like he left them hanging. Those feelings are a plausible outcome of indirect and dishonest leadership with no endgame.

Congress has been the victim of an armed assault, and going forward, platform moderation will have to intersect with counter-terrorism. But the perpetrators of the assault (and not to defend anyone) are also victims: they were lied to.

If the storm-the-capitol crew has an interest in not being lied to this way again, they may want to invest in some trustworthy fact checking.

Notes

See also Fukuyama’s ‘middleware’ concept.

1. I recently found out this metaphor, if not the exact phrase, probably traces back to Supreme Court justice Oliver Wendell Holmes. He used it in his famous dissent in Abrams v. United States (1919). Some of the other justices went to his house before he published it and tried to change his mind on the grounds that unchecked speech would poison the nation. He also had an important ‘but’ in there: “we should be eternally vigilant against attempts to check the expression of opinions that we loathe and believe to be fraught with death, unless they so imminently threaten immediate interference with the lawful and pressing purposes of the law that an immediate check is required to save the country.”

2. Helen Branswell, ‘Measles sweeps an immigrant community targeted by anti-vaccine activists’, STAT.